"Review: Hooked: Inside the Murky World of Australia’s Gambling Industry –

Quentin Beresford (NewSouth)

Cory Doctorow wrote an insightful blog post about code being a liability, not an asset [2].

Aigars Mahinovs wrote an interesting review of the BMW i4 M50 xDrive and the BMW i5 eDrive40 which seem like very impressive vehicles [3]. I was wondering what BMW would do now that all the features they had in the 90s have been copied by cheaper brands but they have managed to do new and exciting things.

The Acknowledgements section from the Scheme Shell (scsh) reference is epic [9].

Vice has an insightful article on research about “do your own research” and how simple Google searches tend to reinforce conspiracy theories [10]. A problem with Google is that it’s most effective if you already know the answer.

The Proof has an interesting article about eating oysters and mussels as a vegan [15].

A SWAY session by Joanne of Royal Far West School. http://sway.org.au/ via https://coviu.com/ SWAY is an oral language and literacy program based on Aboriginal knowledge, culture and stories. It has been developed by Educators, Aboriginal Education Officers and Speech Pathologists at the Royal Far West School in Manly, NSW.

Category: Array

Uploaded by: Silvia Pfeiffer

Hosted: youtube

Category: 2

Uploaded by: Silvia Pfeiffer

Hosted: youtube

This screencast shows how a user of the PARADISEC catalog logs in and explores the collections, items and files that the archive contains.

Category: 2

Uploaded by: Silvia Pfeiffer

Hosted: youtube

Screencast of how to use the PARADISEC catalog for managing and publishing collections.

Category: 2

Uploaded by: Silvia Pfeiffer

Hosted: youtube

Screencast of how a PARADISEC administrator uses the PARADISEC catalog for managing the consistency of metadata and staying on top of uploaded files.

Category: 2

Uploaded by: Silvia Pfeiffer

Hosted: youtube

This presentation covers the science of Earth's "energy budget" of heat inputs and losses, and describes the overall climate system. It continues with a description of the Industrial Age, the introduction of direct temperature measurement, and the resulting temperature rise from burning fossil fuels. This is followed by a description of how Greenhouse Gases operate on a molecular level, the increase since the pre-industrial period, and the carbon cycle. After this, human activity and projections are considered, followed by changes to species' habitats and the possibility of an Anthropocene Extinction Event, then energy trajectories and future global policy directions. Concluding remarks identify climate change as a critical issue and one subject to "race conditions", and note that the policy route, whilst necessary, is currently falling short of requirements.

This was a presentation to Future Day 2026, March 2-4. A transcript is provided along with the accompanying slide deck.

Transcript:

http://levlafayette.com/files/2026FutureDayGlobalClimateTranscript.pdf

Slides:

http://levlafayette.com/files/2026FutureDay_GlobalClimate.pdf

| Attachment | Size |
|---|---|
| | 87.13 KB |
| | 1.37 MB |

Continuations 2026/09: Body parsing

This week I finished a particularly satisfying piece of work: adding request body parsing to Hanami Action. This finishes the story we started back in Hanami 2.3, where we significantly improved Hanami Action’s formats handling. Now you can also use formats to ensure your bodies are appropriately parsed for your expected media type handling.

With this change, Hanami Actions can now serve as fully standalone mini Rack apps, along with everything you’d expect from params handling. It also means we can do away with the one-step-too-far-removed-and-awkward-to-configure body parser middleware that until now we’ve needed in full Hanami apps. Step by step, things get cleaner and better organised!

I had the pleasure of reviewing a range of things this week. Carolyn Cole added an “update” action to our getting started guide (thank you Carolyn!), Adam Ransom gave us two nice bug fixes for Dry Schema’s JSON Schema support (thank you Adam!), and Ramón Valles courteously removed my egregious (but working!) hack from Hanami Webconsole now that binding_of_caller officially supports Ruby 4 (thank you Ramón!).

Katafrakt Korner: Paweł continues his uplift of our view layer, this week merging support for user-configurable default template engines (a new setting plus changes to the generators). And why not also a nice Dry Struct performance improvement while he was at it? Thank you Paweł!

I got back to some work I started on Dry Operation a couple of weeks ago, wrapping up and merging support for a wider range of monads. I have a half-finished “Migrating from Dry Transaction” guide I’m writing, and then after that we can make a really nice Dry Operation release and make a little splash about it.

Site work continues. Jojo merged redirects from our old sites, and is working on a TOC for blog posts (I can’t wait to use that one; thank you Jojo!). I also finished my second pass of all our landing page copy. Copywise, all that’s left for me now is community and sponsorship pages, and any other small bits and bobs.

There’s a lot going on, so I spent a bit of time on Friday taking stock of where we’re at. I think I’m broadly happy with the major features I’ve broken ground on for Hanami 2.4 in May: built-in i18n support, a rebooted Hanami Mailer, and Hanami Minitest. Finishing these off and integrating them up and down our stack is at least a month of work.

Along with that, we also have some good internal uplifts: this week’s Hanami Action body parsing, plus improved static asset detection in Hanami Assets’ watch mode (which I’ve started, but which still needs proper vetting).

If I could squeeze in two more things, it would be memoizing all our container components (feels reasonable), and improving the consistency and configurability of our logging (probably more than I can chew right now). I’m of half a mind to just get started on these to see if I can find a way to finish them in time for the release, along with everything else. I’ve probably already sniped myself into doing it just by writing this.

Thanks to the friendly Gem.coop folks, I’ve registered the @hanami, @dry, and @rom namespaces on the Gem.coop beta. Looking forward to setting up some dual publishing to those soon!

Andy sent me this post about how Carl Kolon uses and thinks about coding agents. The post is pretty interesting, and when I went to reply to Andy I realized that at seven paragraphs perhaps the reply is better suited to here than a Signal message. Also, because that is more friendly than replying to messages with links to your blog to drive traffic and the adoration of your readers? This is of course a forward looking statement for me; I do indeed hope one day to have a reader here, but baby steps.

Similarly to Carl I certainly started using LLMs as “smarter search”, cutting and pasting queries into claude.ai, and waiting patiently for an answer. I think this should make Google very worried, especially as it’s so good at finding answers. The improved performance over a “raw Google” is largely because of persistence: the LLM doesn’t perform a single search, it will keep searching until it finds the answer.

The decreasing levels of supervision Carl talks about is what I am talking about when I talk about prompts and planning, which has been my favourite LLM topic for the last few weeks. Increasingly I am writing a plan for a feature with the LLM, and then asking it to implement it. I also find the structure of these planning documents is super important, and I am iterating to a quite structured style for those. Assuming that I have good unit and functional tests enforced by a pre-commit, I cannot remember a recent time where there’s been a serious bug which wasn’t a design flaw in that planning process. The days of trying to read a terabyte of data into RAM seem to be over.

I think this might also be why I have gotten into understanding the underlying mechanics of things so much recently. Not only is it interesting to me, but I no longer need to be too concerned with high level details like how to do CSS for a single status dashboard. This frees me up to think about more fundamental things like “what even is a VM”? I think I always would have dug into those things if I’d had the time, it’s just that LLMs are the tools that freed up that time for me.

The big barrier for me right now is that it’s rare for me to let the LLM run in unsupervised mode while implementing. I do it with one personal project as a deliberate experiment, and maybe some random personal scripts, but nothing else. I think that’s really slowing me down at this point because I spend all day reading diffs and almost always saying yes. I do occasionally catch things that should have been decided in planning, so it’s hard to stop though. I think the really productive people are probably better at planning than me and therefore can just let it do its thing. Or maybe they have more bugs and don’t notice?

The specific personal project I am letting the LLM run unsupervised on I think is a special case for now because the guard rails are so concrete — the project is cloning an existing tool while implementing it in a much more sandboxed manner. That means that for any given possible target command line, if the output is different with my project that’s a bug and should be fixed. Claude has written something like 760 tests so far asserting this thing, and when it finds a delta it just fixes it. Does it matter if the implementation is a little weird if the output is concretely provably correct?
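The guard rail described above is a form of differential testing: run the same command line through the reference tool and the clone, and treat any difference as a bug. Here is a minimal sketch of one such check, assuming both tools accept identical arguments. The function name `same_output`, and the choice to compare combined stdout/stderr plus exit status, are assumptions for illustration and not the author's actual test suite.

```shell
#!/usr/bin/env bash
# same_output REFERENCE CANDIDATE [ARGS...]
# Hypothetical helper: runs both commands with the same arguments and
# succeeds only if their combined output and exit status are identical.
same_output() {
    local reference="$1" candidate="$2"
    shift 2
    local ref_file cand_file ref_rc cand_rc result

    # Temp files keep the comparison byte-exact (command substitution
    # would silently strip trailing newlines).
    ref_file="$(mktemp)" || return 2
    cand_file="$(mktemp)" || { rm -f "$ref_file"; return 2; }

    "$reference" "$@" >"$ref_file" 2>&1; ref_rc=$?
    "$candidate" "$@" >"$cand_file" 2>&1; cand_rc=$?

    result=1
    if cmp -s "$ref_file" "$cand_file" && [ "$ref_rc" -eq "$cand_rc" ]; then
        result=0
    fi

    rm -f "$ref_file" "$cand_file"
    return "$result"
}
```

A test case then reduces to one line per target command line, for example `same_output reference-tool my-clone --some-flag input.txt || echo "delta found"`, where the tool names and arguments are placeholders.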

The biggest gap I see for that project is that I need to prompt it at the end of a session to pay down debt before I push the PR to CI. My current prompt for that is this:

Thanks for your work on this. I appreciate it. Some final checks before I push the PR:

* Did the changes in this branch introduce any significant amount of duplicated code? Are there any missed opportunities for code reuse or refactoring?

* Has docs/ been updated?

* Is there unit and functional test coverage?

* Are we sure that all rust and python tests are run by both the pre-commit and CI? We’ve had historical problems with missing the guest operation rust code for example.

* All tests should pass. We need to fix any failing tests now before we commit.

* What tests are skipped? Could we reduce that number?

* Are there any TODO comments we should address as part of this work?

* Is all deferred work and pre-existing errors listed in a plan file?

Now, here’s the bit that worries me right now. I’ve just said the important part is the planning process, and that’s where you catch design flaws, avoid bugs, and lay down the guardrails that seem to be vital to a good outcome. That’s a thing I can do because I’ve been writing software for at least 38 years. What of the juniors of the world? How do they develop those skills without the battle scars of having done it the dumb way for a very long time?

Continuations 2026/08: Great feedback

Oops, nearly missed these weeknotes. Let me make this a quick one just to sneak it in and keep the streak alive (6 months and counting!)

My big achievement this week was getting Hanami Minitest ready for feedback. Check out my post for a preview of the generated files and where I’m looking for help. This has already generated a whole lot of great feedback and discussion. Thank you everyone for sharing your thoughts!

I had a couple of very old hanami-rspec preview releases yanked from RubyGems.org (thank you Colby!), so now they no longer confuse the bundle outdated command.

Aaron added a nice new feature to Hanami CLI: a --name option to allow the app name to be customised. I reviewed his PR, which he promptly merged afterwards. Thanks Aaron!

Looked into a potential issue with slice actions not inheriting default headers, but this turned out to be not the case. The report (from Michael Adams) was a good one though, because it highlighted that there may be some issues with our inherited config in a slice-only app that chooses not to have a single shared base class. I’ll look some more into this in the future.

Katafrakt Korner: I merged this nice docs improvement for our upcoming Dry Types docs. Thanks Paweł!

Work has picked up again on the new website! On the weekend I had a good chat with Max, where we talked through a few of the last details for the new home and landing page designs. I’m hoping that March will be our month for the launch.

It is hard to transition from one gitlab machine to another, especially if you have a stupidly large OCI registry using the new metadata database. If you happen to need to dump the metadata postgresql database from an omnibus container, may I commend this pattern to you:

$ docker exec -u registry -it gitlab /bin/bash

$ cd /var/opt/gitlab/postgresql/

$ pg_dump -U registry -h /var/opt/gitlab/postgresql registry > registry.sql

That’s an hour of my life I’m never getting back right there.

This disturbing and amusing article describes how an OpenAI investor appears to be having psychological problems related to SCP-based text generated by ChatGPT [2]. This is definitely going to be a recursive problem as people who believe in it invest in it.

An interesting analysis of dbus and a design for a more secure replacement [3].

Ploum wrote an insightful article about the problems caused by the Github monopoly [5]. Radicale sounds interesting.

Niki Tonsky wrote an interesting article about the UI problems with Tahoe (the latest macOS release) due to trying to make an icon for everything [6]. They have a really good writing style and the article is well researched.

This video about designing a C64 laptop is a masterclass in computer design [9].

Ron Garrett wrote an insightful blog post about abortion [11].

Bruce Schneier and Nathan E. Sanders wrote an insightful article about the potential of LLM systems for advertising and enshittification [12]. We need serious legislation about this ASAP!

Continuations 2026/07: Validation extension

This week I merged Hanami’s i18n support. Thanks to some good feedback from Trung Lê, I added support for fallbacks before merging. Thank you Trung!

This is a big step for our i18n feature, but it’s not the last. To round it out, we’ll need to add i18n awareness to our view layer, plus generators for new apps and slices. Hopefully we can get them sorted across the remainder of February.

I also merged a nice improvement to Dry Operation: a shiny new validation extension from Aaron Allen. Aaron did a great job getting all the essentials in place, and I spent some time in the last week getting everything just so before calling it done. Thank you Aaron!

While I was working in Dry Operation, I added support for a wider range of monads, plus started documenting how to migrate from Dry Transaction. These will all make for a very nice new release in a week or so!

I merged some nice documentation enhancements from Paweł for our new site: docs for Dry::Core::Container, plus importing the Dry CLI docs. We also saw the JRuby folks land a fix to the bug that Paweł reported a few weeks ago!

Ben Sheldon left some great feedback on my Hanami Mailer rebuild. I took the time to consider it and leave some thoughts. I hope that we’ll be able to address a lot of what Ben was looking for. Thank you Ben for sharing!

Tim’s Automation Corner: I added a workflow to automatically create new guide versions in response to releases being published. I love how our new release system is helping everything come together like this!

Yesterday my time I experienced what I think was my first LLM hallucinating a responsible security disclosure. Honestly it was no curl situation, but I think it was still interesting. The bug is on launchpad.net if you’re interested in taking a look. I think in total I spent a couple of hours on the whole thing, with the hardest bit being trying to understand what the author was claiming. Fundamentally they had conflated being able to change the state of memory and other hardware inside their virtual machine with changing the state of those things for the hypervisor. They did not seem to understand that the video memory of the guest was not the video memory of the host for example. That said, I tried to be nice and I hope my replies were perhaps a little useful to them.

This happened circa what, 2008? 2007? I forget now. Anyway, it was a while ago now - and it wasn't my fault - but at the time it was pretty "wtf oh shit I'm in so much trouble" scary levels of scary.

So here we go.

Around that time I was running a little consulting / hosting company. I went into the data centre which hosted my equipment to install a second hand Dell I had acquired and tested. I plugged it into the rack, then plugged in the power, then pushed the on button.

Then bang. Then everything went dark. Then bang again.

Then I get a phone call on the VOIP phone in the data centre. It was their owners, asking me what the fuck I had done.

Anyway, it turned out that everything was dead. It wasn't just that the circuit breakers had tripped. A large part of their power equipment in the DC had also gone.

So yes, I was blamed for taking out a whole data centre. By plugging in one Dell server.

But why was I not in court over it? Why am I not still paying back the damages? Well, it turns out there's way, way more to the story.

First up they added a new rule - "You need to test equipment at this outlet/breaker before you install it." Cool, my server definitely passed that test. It worked just fine.

But then it turns out that although my rack was perfectly under the rated current limits, the other racks were not. Like, in any meaningful way. There were some other customers way, way over their allotted power. So when I plugged in my server - again, I'm way way under my own rack power allotment - I tripped some breaker on the distribution board.

Tripping that breaker meant that the other phase now took the brunt of the load. Again, my rack is fine, but everyone else's was apparently not, so it .. pulled very hard on that rail. And it immediately tripped the second phase breaker.

But that wasn't it.

Then, the second click was when they tried remote flipping the breakers back on. The massive draw of power on phases when the computers in the data centre were powered back up caused some part of their power distribution setup to just plain fail. I forget the exact details here; I think the UPS was pulled on pretty hard too in that instant and I vaguely recall it also got cooked.

I had like, four? servers, a router and a switch. I was definitely not going to cause inrush problems. But the big hosting customers? Apparently they .. had more. Much, much more. Now this isn't my first rodeo when it comes to power sequencing of servers in a data centre - I had done this for like, a LOT of Sun T1s in circa 2000, staging how to turn them on a rack at a time upon a full power-off / power-on cycle event. But apparently this wasn't done by either the data centre or the hosting customers. All power, all on, all at once.

Now, I'm a small fry customer with one rack still paying the early adopter pricing. The companies in question had a lot more racks and were paying a lot more. So, this was all mostly swept under the rug, I stopped being blamed, and over the next few months we all got emails from the data centre telling us about the "new, very enforced power limits per rack, and we're going to keep an eye on it."

Anyway, fun times from ye olde past when I was doing dumb stuff but people were making much more money doing much dumber stuff at times.

In the early 2000s I was in my mid 20s, working a dead end job as a Windows programmer, and had two very young kids who were not super good at sleeping. I had worked as what I would now call a systems programmer for the Australian patents and trade marks office for a few years in the late 1990s doing low level image manipulation code. We had what was, for the time, quite an impressive database of scanned images of patents and trademarks, and sometimes we needed to do things like turn them into PDFs or import a weird made up image format from the Japanese patents office.

So when you combined those things — previous experience in a field I found interesting, a job I did not currently find interesting, and a lot of spare time very early in the morning because the kids wouldn’t sleep but my wife really did need a rest — you end up with a Michael who spent a lot of time writing image manipulation code on his own time. Even back then I was pretty into Open Source, so I released what I think was probably the first Open Source PDF generation library, as well as a series of imaging tools such as pngtools. pngtools was modeled on the libtiff tifftools which I had used extensively at work.

And then things changed. I wrote a couple of books. I got hired by Google and moved to California. The kids got a bit older and life got a bit more complicated… And pngtools just sort of sat there. Except, many Linux distributions had packaged it by then. I guess I had kind of hoped that someone else with more time or passion for the project would reach out and ask to take it over, but that simply never happened. A few years ago I felt sufficient guilt to dig out the SVN repo from backups and convert it to a git repo on github, but apart from occasionally merging a PR from some random on the internet nothing has really happened to pngtools in literally decades.

I think this is where we get to the “mental health” bit of the title: I’ve been actively contributing to Open Source for nearly 30 years, but I can’t really explain what I got in return apart from the occasional dopamine hit. I think I could argue that Google wouldn’t have hired me without my Open Source work, but that’s probably also not entirely true. They hired lots of other people with no history of Open Source contributions. I think overall my lived experience is that I would often have been better off personally not contributing my code publicly. I grow tired of people’s poor social skills and having to fight to get bugs fixed. In a world where it’s much easier to write non-trivial things with LLM tooling, I am genuinely unsure if it’s worth the effort of trying to collaborate with people who clearly do not want to collaborate with me. I am sure some of this is my fault, certainly I think I am more sensitive to rejection than your average bear, probably because of ADHD, but it can’t all be my fault.

So why would I pick pngtools back up now? Well there’s a latent sense that it needs some love and that no one else is going to do it, but also those LLM tools from the previous paragraph actually make it a lot easier to rebuild state than previously. I don’t really need to deeply understand all the code in a language I haven’t written in for 20 years. I can just supervise a robot that does it. I know it’s fashionable to hate on the LLM development tools, but honestly that always seems to come from someone who hasn’t actually tried them and is ultimately scared for their job. I don’t think the tools replace me, they simply make me a lot more productive, just like web search did when first introduced.

I should be clear to say I see a distinction between tightly bounded problem spaces with clear definitions of correct like coding, and AI generation of art or English essays. I think those latter fields are much more complicated both in terms of correctness and ethics.

And anyways, that’s how you end up with pngtools v1.0 after a mere 25 years.

Continuations 2026/06: Mailer rebuild

The highlight of this week was sharing my Hanami Mailer rebuild. If you’re interested in how our mailers will fit alongside actions and views (and reuse the latter!), check it out. I wrote up all the different ways you can use the API, so you can get a sense of it all without even going into the code.

My next step here is to wait for any feedback from the other Hanami maintainers. In a week or so I’ll merge this and sort out the full framework integration story. Once that’s done we can start to make more noise about it and hopefully get some real user testing.

I made some releases this week! Hanami CLI v2.3.5 includes a fix to make asset paths work better with “sandboxed” Node.js setups. Thanks Hailey for the fix! Dry Operation v1.1.1 enhances our transaction support and makes it possible to provide extra transaction options at both class and per-transaction levels. Thanks Armin for this improvement! And Dry Types v1.9.1 includes a workaround for a JRuby bug. Thanks Paweł for continuing to improve our JRuby support!

Last week, I filed a bug with Parklife about a peculiarity with the way we were using it (using dotted version numbers in our URLs). This week, I got to test the fix! It works brilliantly, and now I’ll get to remove this patch from our codebase. Thank you Ben for one of my favourite bits of software!

Still on the site, I reviewed our backend-focused tickets and shared a bunch with Jojo for the time she’s continuing to spend on it (there’s actually not much left on the critical path for launch, which is great!). And Max has come back with a revision to our designs, which I’ll review tomorrow.

I finally got to spend some time properly reviewing Aaron’s validation extension for Dry Operation. I know this is going to be a much loved feature (thank you Aaron for building it!), so I’m glad I can spend the time to help make it as good as possible.

We hit a major milestone this week. I ported Hanami Assets, DB, Reloader, RSpec, Utils, Validations, and Webconsole (oh my!) over to repo-sync, and now every Hanami repo is synced! That’s the entirety of our Dry and Hanami organisations, both fully synced. This also means their releases all come via release-machine, which is what allowed me to link to those nice GitHub release pages earlier in the dotpoint above :)

To celebrate, I took the chance to make a couple nice little improvements to these automation repos. I moved repo-sync’s listing of repos/files to a dedicated config file, and added a scheduled workflow to clean up stale preview branches. I also updated our forum release announcements so they auto-link to GitHub resources, like usernames, PR numbers and commits.

Andrew Pam spoke about new developments in Linux gaming and about hardware and OS support for games etc. He also described some interesting developments with Linux support for SMR disks [1].

Then we discussed Everything Open 2026 [2]. We had some discussion about some of the lectures including the final one which generated controversy (here is the playlist for EO 2026 lectures [3]).

We discussed ideas for running a more effective BOF on a difficult topic like FOSS on mobile phones. The main conclusions seemed to be to have more than 2 people chairing the BOF and more than 1 hour to run it. Maybe a BOF as an introduction to a hack evening.

Then we had discussions about the future of LUGs, the difficulty in getting interest in attending meetings, and to what extent YouTube replaces meetings. One conclusion was that we should publish videos of meetings even if they aren’t going to be interesting to most people. If 99% of people find that watching a meeting they can’t contribute to is boring then we get 1% who are interested and if the number of people who see the video is large enough then 1% becomes a good number.

We finished by discussing Linux promotion. There was a general feeling that a Linux Australia subcommittee dedicated to promoting Linux would be a good thing, but I didn’t poll the people to determine which of those who agreed it was a good idea were actually interested in doing the work.

I (Russell Coker) used my Furilabs FLX1s for the meeting and it worked well running Firefox talking to the Big Blue Button server but unfortunately Firefox wouldn’t work with the camera. This was a reasonable result and the 3 hour meeting used slightly over 50% of the phone’s battery which is much better than a Librem5 or PinePhone Pro could manage. For as yet unknown reasons my desktop PC didn’t want to talk to the webcam that I have been using for years.

The meeting had 10 people attending which isn’t a large meeting but was enough for many interesting discussions.

Topics for future meetings include using storage technology such as SMR disks which we also agreed was a good topic for ongoing discussions in the Matrix room over the course of weeks. Changing storage options is not a trivial thing and not something that can be done quickly or easily.

How to best run BOFs and workshops at conferences was agreed to be a topic that needs more discussion. We are talking about how to get things done efficiently for Everything Open 2027 already!

Digital sovereignty was briefly discussed in the meeting and agreed to be a topic for future meetings. It is a complex topic and we will break it down and address separate parts in different meetings. A meeting about “digital sovereignty” on its own is probably not going to have enough focus to achieve things. A meeting about a specific topic such as “which cloud to use” can get some good results.

I’ve been experimenting with Claude Code quite a bit recently, and found myself wanting to be able to view my usage at a glance. Unfortunately, Anthropic don’t yet provide this information in a format consumable by a status line helper, so I’ve hacked together a little script that’ll do it for you.

I have it set to show me how much of my 5 hour cap I’ve used (the solid bar), how far through the 5 hour window we are (the * in the progress bar), as well as my 7d usage and how full the context currently is. It’ll change colours to warn you when you’re potentially going to hit your limit.

Here’s a gist with the files you need: copy the scripts to your ~/.claude/scripts directory, and add the settings to your ~/.claude/settings.json. Run ~/.claude/scripts/refresh-usage.sh --force to get fresh data immediately.

Given that it’s unofficial behaviour, it may break or get you banned at any time, but I hope not.
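The status line layout described above (a solid bar for usage, with a `*` marking how far through the window we are) could be rendered roughly as follows. This is a sketch only and not the script from the gist: the function name `render_bar`, its percentage arguments and the `#`/`-` characters are all assumptions, and it leaves out the colour changes and the data fetching entirely.

```shell
#!/usr/bin/env bash
# render_bar USED_PCT ELAPSED_PCT [WIDTH]
# Hypothetical helper: prints a bar such as [###--*----] where '#' cells
# show how much of the cap is used, '*' marks the position in the time
# window, and '-' is unused capacity. Percentages are integers 0-100.
render_bar() {
    local used="$1" elapsed="$2" width="${3:-10}"
    local filled=$(( used * width / 100 ))
    local marker=$(( elapsed * width / 100 ))
    local bar="" i=0

    # Keep the marker inside the bar when the window is fully elapsed.
    if [ "$marker" -ge "$width" ]; then
        marker=$(( width - 1 ))
    fi

    while [ "$i" -lt "$width" ]; do
        if [ "$i" -eq "$marker" ]; then
            bar="${bar}*"          # the marker wins over a filled cell
        elif [ "$i" -lt "$filled" ]; then
            bar="${bar}#"
        else
            bar="${bar}-"
        fi
        i=$(( i + 1 ))
    done

    printf '[%s]\n' "$bar"
}
```

For example, `render_bar 30 50 10` prints `[###--*----]`: usage is behind the clock, so there is headroom. When the filled cells run past the `*`, as in `render_bar 80 20 10`, usage is ahead of the window and a warning colour would be appropriate.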

Monday, 20th Jan 2025, 6:00pm (ACDT)

Recording is available at https://www.youtube.com/watch?v=4clkwhrXImY

Attendance record is available upon request

Meeting started at 6:00pm ACDT.

MR JOEL ADDISON, President

Acknowledgment of the traditional owners of the lands on which we meet, particularly the Kaurna people who are the traditional owners of the land that Everything Open 2025 was held on.

MOTION by JOEL ADDISON that the minutes of the Annual General Meeting 2024 of Linux Australia be accepted as complete and accurate.

Seconded by Sae Ra Germaine

Motion Passed with 4 abstentions, no nays

MR JOEL ADDISON – President

The full report is attached to the annual report, so only a few highlights here:

Questions:

MR NEILL COX – Secretary

Call for people to fill in the attendance sheet.

Again the full report is contained in the Annual Report so just a few highlights here.

MR RUSSELL STUART – Treasurer – Includes presentation of the Auditor’s Report

Questions

Question from Steve Ellis: The 25% is that on revenue or profits?

Response: Profits. There are also other implications [This is a summary of the answer Russell provided; see 33:43 of the recording for the full Q&A]

Question from the floor: Of the eight or so what category of non profit seems appropriate or possible?

Response: It turns out we are a scientific institution [again a summary; see the recording at 36:01 for the full answer]

Q: You said that if Everything Open didn’t run next year that you would make an $80,000 loss. How could that happen if you don’t run an event?

A[Russell]: No, we wouldn’t make a loss, but we would miss out on the $18,000 profit which is what it made this year. It ranges between that and $40,000 for the last 27 years. [Full answer at 37:18]

Q from Josh Hesketh: What did we provide to Drupal Singapore – was it banks, insurance or other and would we extend that to other conferences in Singapore and further would we consider other countries in the APAC region?

A[Joel]: With that one it was bank accounts and insurance as you mentioned. It’s not certain that we will continue the agreement with the Drupal Association for future conferences. There can also be tax implications. We will assess future conferences as they come up [Full question and answer at 38:48]

Q: Alexar: Is Linux Australia considering having an impact in the APAC region as a strategy or will this just be a case by case approach?

A[Joel]: We’ve always done stuff across Australia and New Zealand and we have supported some other events in the region. We are not ready to commit to a strategic approach without more investigation. For now we are predominantly focussed on Australia and New Zealand but there are opportunities to work with other organisations in the region.

Follow up Question: Can subcommittees pursue similar opportunities?

Follow up Answer: We always say to our subcommittees feel free to bring any ideas to us and we will discuss them with them and go from there.

[Full question and answer at 43:00]

Q [Cherie Ellis]: Is there some way that things can be turned or twisted so that it’s still Everything Open but that LCA or the Linux Australia name is bonded to it so that it becomes the recognizable icon that our sponsors know?

A[Joel]: The sponsors we spoke to understand the alignment. The challenge is that sponsors like IBM no longer operate in the same way in Australia for that particular area. We managed to find a number of new sponsors this year. Every conference has found that a number of recurring sponsors have said no this year because they can’t afford it or they don’t have the budget. We’ve also had a number of sponsors who signed up and then pulled out in the last two weeks prior to a conference. We expect these challenges to continue over the next 12 months, but we are better prepared for them now. As to bringing LCA back, one idea that has been considered is to turn the Linux Kernel Miniconf into LCA as part of Everything Open. That was the intent but we haven’t had enough people step up to make it happen. We do have Carlos from the Open SI institute at the University of Canberra who would like to bring Everything Open to Canberra next year.

[46:45]

Q[Steve Ellis]: Thank you for your support of the community. Do we need a group in Linux Australia focused on sponsorship across all of the events?

A[Joel]: Building a pool of organisations is definitely something we need, not just for sponsorship but also to promote the awareness of the events internally in their organisations. We have discussed setting up some sort of central thing. We could set up a working group and go through some of that. Some of the other conferences have also expressed interest.

[53:48]

Not a Question: Russell forgot to mention that there will be no grants program this year as there are no profits to pay them from.

[57:31]

Q[Paul Wayper]: Question for the Treasurer: How can the community help Everything Open survive from the ticket price perspective?

A [Russell]: I don’t actually set the budget for the conferences. That’s done by the conference treasurers. I try to give them as much freedom as possible beyond “don’t make a loss”. I don’t have an easy answer for your question.

[58:06]

Returning Officer Julien Goodwin gives his report.

He notes that on examination of the stats Russell and Sae Ra are tied for length of time on the LA Council.

This is Julien’s third time as returning officer.

We have a well dialled-in system now.

The only two things of note are:

Results

Joel: Thank you for coming along to the AGM. I want to say one more thank you to Sae Ra because you have been a big help to me.

Meeting closed at 19:05 ACDT

AGM Minutes Confirmed by 2025 Linux Australia Council

| Name | Role |
|---|---|
| Joel Addison | President |
| Jennifer Cox | Vice-President |
| Neill Cox | Secretary |
| Russell Stuart | Treasurer |
| Lilly Ho | Ordinary Council Member |
| Elena Williams | Ordinary Council Member |
| Jonathan Woithe | Ordinary Council Member |

The post 2025 Linux Australia AGM Minutes appeared first on Linux Australia.

I have just got a Furilabs FLX1s [1] which is a phone running a modified version of Debian. I want to have a phone that runs all apps that I control and can observe and debug. Android is very good for what it does and there are security focused forks of Android which have a lot of potential, but for my use a Debian phone is what I want.

The FLX1s is not going to be my ideal phone, I am evaluating it for use as a daily-driver until a phone that meets my ideal criteria is built. In this post I aim to provide information to potential users about what it can do, how it does it, and how to get the basic functions working. I also evaluate how well it meets my usage criteria.

I am not anywhere near an average user. I don’t think an average user would ever even see one unless a more technical relative showed one to them. So while this phone could be used by an average user I am not evaluating it on that basis. But of course the features of the GUI that make a phone usable for an average user will allow a developer to rapidly get past the beginning stages and into more complex stuff.

The Furilabs FLX1s [1] is a phone that is designed to run FuriOS which is a slightly modified version of Debian. The purpose of this is to run Debian instead of Android on a phone. It has switches to disable the camera, phone communication, and microphone, but the one to disable phone communication doesn’t turn off Wifi. The only other phone I know of with such switches is the Purism Librem 5.

It has a 720*1600 display which is only slightly better than the 720*1440 display in the Librem 5 and PinePhone Pro. This doesn’t compare well to the OnePlus 6 from early 2018 with 2280*1080 or the Note 9 from late 2018 with 2960*1440 – which are both phones that I’ve run Debian on. The current price is $US499 which isn’t that good when compared to the latest Google Pixel series: a Pixel 10 costs $US649, has a 2424*1080 display, and has 12G of RAM while the FLX1s only has 8G. Another annoying thing is how rounded the corners are. It seems that round corners that cut off the content are a standard practice nowadays; in my collection of phones the latest one I found with hard right angles on the display was a Huawei Mate 10 Pro which was released in 2017. The corners are rounder than those of the Note 9, which annoys me because the screen is not high resolution by today’s standards so losing the corners matters.

The default installation is Phosh (the GNOME shell for phones) and it is very well configured. Based on my experience with older phone users I think I could give a phone with this configuration to a relative in the 70+ age range who has minimal computer knowledge and they would be happy with it. Additionally I could set it up to allow ssh login and instead of going through the phone support thing of trying to describe every GUI setting to click on based on a web page describing menus for the version of Android they are running I could just ssh in and run diff on the .config directory to find out what they changed. Furilabs have done a very good job of setting up the default configuration, while Debian developers deserve a lot of credit for packaging the apps the Furilabs people have chosen a good set of default apps to install to get it going and appear to have made some noteworthy changes to some of them.
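The “diff on the .config directory” idea can be sketched in a few lines. This is only an illustration, assuming you kept a copy of the directory as it was when you set the phone up; the two directory paths are hypothetical and `config_changes` is a name made up for this example. It uses `filecmp.dircmp` from the Python standard library, which by default treats files with identical size and modification time as unchanged.

```python
import filecmp
from pathlib import Path


def config_changes(baseline: str, current: str) -> list:
    """Describe how `current` differs from `baseline`, recursing into subdirectories."""
    changes = []

    def walk(node: filecmp.dircmp, prefix: Path) -> None:
        # left_only: present in the baseline only; right_only: present now only.
        changes.extend(f"removed  {prefix / name}" for name in node.left_only)
        changes.extend(f"added    {prefix / name}" for name in node.right_only)
        changes.extend(f"changed  {prefix / name}" for name in node.diff_files)
        for name, sub in node.subdirs.items():
            walk(sub, prefix / name)

    walk(filecmp.dircmp(baseline, current), Path("."))
    return sorted(changes)


if __name__ == "__main__":
    # Hypothetical paths: a saved copy of the defaults and the live directory.
    for line in config_changes("/home/user/config-baseline", "/home/user/.config"):
        print(line)
```

Run over ssh, this gives a short list of what the user changed without having to walk them through the GUI.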

The OS is based on Android drivers (using the same techniques as Droidian [2]) and the storage device has the huge number of partitions you expect from Android as well as a 110G Ext4 filesystem for the main OS.

The first issue with the Droidian approach of using an Android kernel and containers for user space code to deal with drivers is that it doesn’t work that well. There are 3 D state processes (uninterruptible sleep – which usually means a kernel bug if the process remains in that state) after booting and doing nothing special. My tests running Droidian on the Note 9 also had D state processes, in this case they are D state kernel threads (I can’t remember if the Note 9 had regular processes or kernel threads stuck in D state). It is possible for a system to have full functionality in spite of some kernel threads in D state but generally it’s a symptom of things not working as well as you would hope.
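For anyone who wants to check their own device, here is a minimal sketch of one way to list tasks in D state on Linux. It is not the method used for the counts above, just an illustration that reads `/proc/<pid>/stat`; the function names are made up for this example. Run it a few times, as a task that is only briefly in D state is normal and it is the ones that stay there that matter.

```python
from pathlib import Path


def parse_stat(stat_line: str) -> tuple:
    """Return (pid, command name, state letter) from a /proc/<pid>/stat line.

    The command name is wrapped in parentheses and may itself contain spaces
    or parentheses, so split on the LAST ')' rather than on whitespace.
    """
    head, _, tail = stat_line.rpartition(")")
    pid_text, _, comm = head.partition("(")
    return int(pid_text), comm, tail.split()[0]


def d_state_tasks(proc_root: str = "/proc") -> list:
    """List (pid, name) for every task currently in uninterruptible sleep."""
    found = []
    for stat_path in Path(proc_root).glob("[0-9]*/stat"):
        try:
            pid, comm, state = parse_stat(stat_path.read_text())
        except (OSError, ValueError, IndexError):
            continue  # the process exited while we were reading it
        if state == "D":
            found.append((pid, comm))
    return sorted(found)


if __name__ == "__main__":
    for pid, comm in d_state_tasks():
        print(pid, comm)
```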

The design of Droidian is inherently fragile. You use a kernel and user space code from Android and then use Debian for the rest. You can’t do everything the Android way (with the full OS updates etc) and you also can’t do everything the Debian way. The Tow-Boot functionality in the PinePhone Pro is really handy for recovery [3], it allows the internal storage to be accessed as a USB mass storage device. The full Android setup with ADB has some OK options for recovery, but part Android and part Debian has fewer options. While it probably is technically possible to do the same things in regard to OS repair and reinstall, the fact that it’s different from most other devices means that fixes can’t be done in the same way.

The system uses Phosh and Phoc, the GNOME system for handheld devices. It’s a very different UI from Android, I prefer Android but it is usable with Phosh.

Chatty works well for Jabber (XMPP) in my tests. It supports Matrix which I didn’t test because I don’t desire the same program doing Matrix and Jabber and because Matrix is a heavy protocol which establishes new security keys for each login so I don’t want to keep logging in on new applications.

Chatty also does SMS but I couldn’t test that without the SIM caddy.

I use Nheko for Matrix which has worked very well for me on desktops and laptops running Debian.

I am currently using Geary for email. It works reasonably well but is lacking proper management of folders, so I can’t just subscribe to the important email on my phone so that bandwidth isn’t wasted on less important email (there is a GNOME gitlab issue about this – see the Debian Wiki page about Mobile apps [4]).

Music playing isn’t a noteworthy thing for a desktop or laptop, but a good music player is important for phone use. The Lollypop music player generally does everything you expect along with support for all the encoding formats including FLAC0 – a major limitation of most Android music players seems to be lack of support for some of the common encoding formats. Lollypop has its controls for pause/play and going forward and backward one track on the lock screen.

The installed map program is gnome-maps which works reasonably well. It gets directions via the Graphhopper API [5]. One thing we really need is a FOSS replacement for Graphhopper in GNOME Maps.

I received my FLX1s on the 13th of Jan [1]. I had paid for it on the 16th of Oct but hadn’t received the email with the confirmation link so the order had been put on hold. But after I contacted support about that on the 5th of Jan they rapidly got it to me which was good. They also gave me a free case and screen protector to apologise, I don’t usually use screen protectors but in this case it might be useful as the edges of the case don’t even extend 0.5mm above the screen. So if it falls face down the case won’t help much.

When I got it there was an open space at the bottom where the caddy for SIMs is supposed to be. So I couldn’t immediately test VoLTE functionality. The contact form on their web site wasn’t working when I tried to report that and the email for support was bouncing.

As a test of Bluetooth I connected it to my Nissan LEAF which worked well for playing music and I connected it to several Bluetooth headphones. My Thinkpad running Debian/Trixie doesn’t connect to the LEAF or to headphones which have worked on previous laptops running Debian and Ubuntu. A friend’s laptop running Debian/Trixie also wouldn’t connect to the LEAF so I suspect a bug in Trixie, I need to spend more time investigating this.

Currently 5GHz wifi doesn’t work, this is a software bug that the Furilabs people are working on. 2.4GHz wifi works fine. I haven’t tested running a hotspot due to being unable to get 4G working as they haven’t yet shipped me the SIM caddy.

This phone doesn’t support DP Alt-mode or Thunderbolt docking so it can’t drive an external monitor. This is disappointing, Samsung phones and tablets have supported such things since long before USB-C was invented. Samsung DeX is quite handy for Android devices and that type of feature is much more useful on a device running Debian than on an Android device.

The camera works reasonably well on the FLX1s. Until recently for the Librem 5 the camera didn’t work and the camera on my PinePhone Pro currently doesn’t work. Here are samples of the regular camera and the selfie camera on the FLX1s and the Note 9. I think this shows that the camera is pretty decent. The selfie looks better and the front camera is worse for the relatively close photo of a laptop screen – taking photos of computer screens is an important part of my work but I can probably work around that.

I wasn’t assessing this camera to find out if it’s great, just to find out if I have the sorts of problems I had before, and it just worked. The Samsung Galaxy Note series of phones has always had decent specs including good cameras. Even though the Note 9 is old, comparing well to it is a respectable performance. The lighting was poor for all photos.

In 93 minutes having the PinePhone Pro, Librem 5, and FLX1s online with open ssh sessions from my workstation the PinePhone Pro went from 100% battery to 26%, the Librem 5 went from 95% to 69%, and the FLX1s went from 100% to 99%. The battery discharge rate of them was reported as 3.0W, 2.6W, and 0.39W respectively. Based on having a 16.7Wh battery 93 minutes of use should have been close to 4% battery use, but in any case all measurements make it clear that the FLX1s will have a much longer battery life. Including the measurement of just putting my fingers on the phones and feeling the temperature (FLX1s felt cool and the others felt hot).
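The “close to 4%” estimate can be checked with a few lines of arithmetic. This is a sketch that assumes the reported 0.39W draw was constant for the whole 93 minutes and that the 16.7Wh capacity is accurate; the function names are just for illustration.

```python
def percent_used(power_watts: float, minutes: float, capacity_wh: float) -> float:
    """Share of the battery consumed at a constant power draw, as a percentage."""
    energy_wh = power_watts * minutes / 60
    return 100 * energy_wh / capacity_wh


def hours_to_empty(power_watts: float, capacity_wh: float) -> float:
    """Run time from full to empty at a constant power draw."""
    return capacity_wh / power_watts


if __name__ == "__main__":
    # FLX1s figures from the text: 0.39W reported draw, 93 minutes, 16.7Wh battery.
    print(f"expected use: {percent_used(0.39, 93, 16.7):.1f}%")
    print(f"idle run time: {hours_to_empty(0.39, 16.7):.0f} hours")
```

That works out to about 3.6% of the battery, so the reported 1% drop and the reported 0.39W can’t both be exactly right, but either figure is far better than the other two phones.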

The PinePhone Pro and the Librem 5 have an optional “Caffeine mode” which I enabled for this test, without that enabled the phone goes into a sleep state and disconnects from Wifi. So those phones would use much less power without caffeine mode enabled, but they also couldn’t give a fast response to notifications etc. I found the option to enable a Caffeine mode switch on the FLX1s but the power use was reported as being the same both with and without it.

One problem I found with my phone is that in every case it takes 22 seconds to negotiate power. Even when using straight USB charging (no BC or PD) it doesn’t draw any current for 22 seconds. When I connect it, it will stay at 5V and vary between 0W and 0.1W (current rounded off to zero) for 22 seconds or so and then start charging. After the 22 second delay the phone will make the tick sound indicating that it’s charging and the power meter will measure that it’s drawing some current.

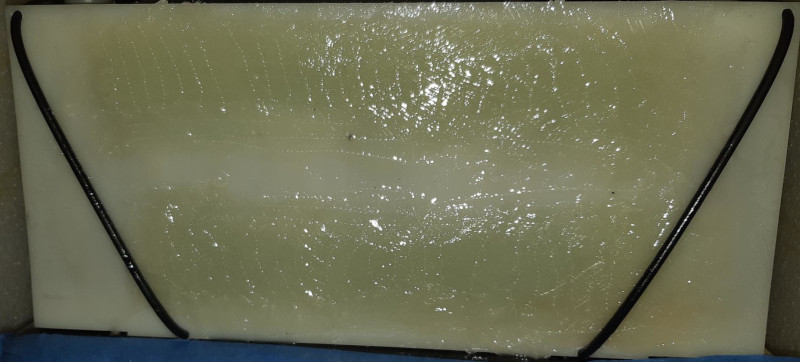

I added the table from my previous post about phone charging speed [6] with an extra row for the FLX1s. For charging from my PC USB ports the results were the worst ever. The port that does BC did not work at all: it looped trying to negotiate, and after each 22 second negotiation delay the port would turn off. The non-BC port gave only 2.4W which matches the 2.5W given by the spec for a “High-power device” which is what that port is designed to give. In a discussion on the Purism forum about the Librem 5 charging speed one of their engineers told me that the reason why their phone would draw 2A from that port was because the cable was identifying itself as a USB-C port not a “High-power device” port. But for some reason out of the 7 phones I tested the FLX1s and the One Plus 6 are the only ones to limit themselves to what the port is apparently supposed to do. Also the One Plus 6 charges slowly on every power supply so I don’t know if it is obeying the spec or just sucking.

On a cheap AliExpress charger the FLX1s gets 5.9V and on a USB battery it gets 5.8V. Out of all 42 combinations of device and charger I tested these were the only ones to involve more than 5.1V but less than 9V. I welcome comments suggesting an explanation.

The case that I received has a hole for the USB-C connector that isn’t wide enough for the plastic surrounds on most of my USB-C cables (including the Dell dock). Also to make a connection requires a fairly deep insertion (deeper than the One Plus 6 or the Note 9). So without adjustment I have to take the case off to charge it. It’s no big deal to adjust the hole (I have done it with other cases) but it’s an annoyance.

| Phone | Top z640 | Bottom z640 | Monitor | Ali Charger | Dell Dock | Battery | Best | Worst |
|---|---|---|---|---|---|---|---|---|
| FLX1s | FAIL | 5.0V 0.49A 2.4W | 4.8V 1.9A 9.0W | 5.9V 1.8A 11W | 4.8V 2.1A 10W | 5.8V 2.1A 12W | 5.8V 2.1A 12W | 5.0V 0.49A 2.4W |
| Note9 | 4.8V 1.0A 5.2W | 4.8V 1.6A 7.5W | 4.9V 2.0A 9.5W | 5.1V 1.9A 9.7W | 4.8V 2.1A 10W | 5.1V 2.1A 10W | 5.1V 2.1A 10W | 4.8V 1.0A 5.2W |
| Pixel 7 pro | 4.9V 0.80A 4.2W | 4.8V 1.2A 5.9W | 9.1V 1.3A 12W | 9.1V 1.2A 11W | 4.9V 1.8A 8.7W | 9.0V 1.3A 12W | 9.1V 1.3A 12W | 4.9V 0.80A 4.2W |
| Pixel 8 | 4.7V 1.2A 5.4W | 4.7V 1.5A 7.2W | 8.9V 2.1A 19W | 9.1V 2.7A 24W | 4.8V 2.3A 11.0W | 9.1V 2.6A 24W | 9.1V 2.7A 24W | 4.7V 1.2A 5.4W |
| PPP | 4.7V 1.2A 6.0W | 4.8V 1.3A 6.8W | 4.9V 1.4A 6.6W | 5.0V 1.2A 5.8W | 4.9V 1.4A 5.9W | 5.1V 1.2A 6.3W | 4.8V 1.3A 6.8W | 5.0V 1.2A 5.8W |
| Librem 5 | 4.4V 1.5A 6.7W | 4.6V 2.0A 9.2W | 4.8V 2.4A 11.2W | 12V 0.48A 5.8W | 5.0V 0.56A 2.7W | 5.1V 2.0A 10W | 4.8V 2.4A 11.2W | 5.0V 0.56A 2.7W |
| OnePlus6 | 5.0V 0.51A 2.5W | 5.0V 0.50A 2.5W | 5.0V 0.81A 4.0W | 5.0V 0.75A 3.7W | 5.0V 0.77A 3.7W | 5.0V 0.77A 3.9W | 5.0V 0.81A 4.0W | 5.0V 0.50A 2.5W |
| Best | 4.4V 1.5A 6.7W | 4.6V 2.0A 9.2W | 8.9V 2.1A 19W | 9.1V 2.7A 24W | 4.8V 2.3A 11.0W | 9.1V 2.6A 24W | | |

The Furilabs support people are friendly and enthusiastic but my customer experience wasn’t ideal. It was good that they could quickly respond to my missing order status and the missing SIM caddy (which I still haven’t received but believe is in the mail) but it would be better if such things just didn’t happen.

The phone is quite user friendly and could be used by a novice.

I paid $US577 for the FLX1s which is $AU863 by today’s exchange rates. For comparison I could get a refurbished Pixel 9 Pro Fold for $891 from Kogan (the major Australian mail-order company for technology) or a refurbished Pixel 9 Pro XL for $842. The Pixel 9 series has security support until 2031 which is probably longer than you can expect a phone to be used without being broken. So a phone with a much higher resolution screen that’s only one generation behind the latest high end phones and is refurbished will cost less. For a brand new phone a Pixel 8 Pro which has security updates until 2030 costs $874 and a Pixel 9A which has security updates until 2032 costs $861.

Doing what the Furilabs people have done is not a small project. It’s a significant amount of work and the prices of their products need to cover that. I’m not saying that the prices are bad, just that economies of scale and the large quantity of older stock makes the older Google products quite good value for money. The new Pixel phones of the latest models are unreasonably expensive. The Pixel 10 is selling new from Google for $AU1,149 which I consider a ridiculous price that I would not pay given the market for used phones etc. If I had a choice of $1,149 or a “feature phone” I’d pay $1,149. But the FLX1s for $863 is a much better option for me. If all I had to choose from was a new Pixel 10 or a FLX1s for my parents I’d get them the FLX1s.

For a FOSS developer a FLX1s could be a mobile test and development system which could be lent to a relative when their main phone breaks and the replacement is on order. It seems to be fit for use as a commodity phone. Note that I give this review on the assumption that SMS and VoLTE will just work, I haven’t tested them yet.

The UI on the FLX1s is functional and easy enough for a new user while allowing an advanced user to do the things they desire. I prefer the Android style and the Plasma Mobile style is closer to Android than Phosh is, but changing it is something I can do later. Generally I think that the differences between UIs matter more when on a desktop environment that could be used for more complex tasks than on a phone which limits what can be done by the size of the screen.

I am comparing the FLX1s to Android phones on the basis of what technology is available. But most people who would consider buying this phone will compare it to the PinePhone Pro and the Librem 5 as they have similar uses. The FLX1s beats both those phones handily in terms of battery life and of having everything just work. But it has the most non-free software of the three and the people who want the $2000 Librem 5 that’s entirely made in the US won’t want the FLX1s.

This isn’t the destination for Debian based phones, but it’s a good step on the way to it and I don’t think I’ll regret this purchase.

Meeting opened at 20:04 AEDT by Joel and quorum was achieved.

Minutes taken by Neill.

Everything Open was notified that a volunteer has been approved for a working with children check. The council will check whether they deliberately used Linux Australia as they are not volunteering with Everything Open or directly with Linux Australia.

MOTION: Linux Australia adds Dave Sparks and Christopher Burgess to its anz.co.nz mandate as payment authorisers.

I seek a seconder and votes on the motion.

I vote in favour of the motion.

Seconded by Jonathan Woithe

Results: Motion passed

secretary@linux.org.au – Contact the volunteer re their working with children check.

secretary@linux.org.au – Send notification of upcoming election.

Meeting closed at 20:44 AEDT

Next meeting is scheduled for 2026-01-14 and is not a subcommittee meeting, but will be the final meeting for the current council.

The post 2025-12-17 Council Meeting Minutes appeared first on Linux Australia.

Meeting opened at 20:05 AEDT by Joel and quorum was achieved

Minutes taken by Neill.

https://lists.linux.org.au/pipermail/announce/2024-December/000373.html

Secretary to send reminder for membership on the 10th

Dates:

Meeting closed at 21:53 AEDT (UT+11:00)

Next meeting is scheduled for 2025-12-17 at 20:00 AEDT (UT+11:00)

The post 2025-12-03 Council Meeting Minutes appeared first on Linux Australia.

I just read this informative article on ANSI terminal security [1]. The author has written a tool named vt-houdini for testing for these issues [2]. They used to host an instance on their server but appear to have stopped it. When you run that tool you can ssh to the system in question and without needing a password you are connected and the server probes your terminal emulator for vulnerabilities. The versions of Kitty and Konsole in Debian/Trixie have just passed those tests on my system.

This will always be a potential security problem due to the purpose of a terminal emulator. A terminal emulator will often display untrusted data and often data which is known to come from hostile sources (EG logs of attempted attacks). So what could be done in this regard?

Due to the complexity of terminal emulation there is the possibility of buffer overflows and other memory management issues that could be used to compromise the emulator.

The Fil-C compiler is an interesting project [3], it compiles existing C/C++ code with memory checks. It is reported to have no noticeable impact on the performance of the bash shell which sounds like a useful option to address some of these issues as shell security issues are connected to terminal security issues. The performance impact on a terminal emulator would be likely to be more noticeable. Also note that Fil-C compilation apparently requires compiling all libraries with it, this isn’t a problem for bash as the only libraries it uses nowadays are libtinfo and libc. The kitty terminal emulator doesn’t have many libraries but libpython is one of them, it’s an essential part of Kitty and it is a complex library to compile in a different way. Konsole has about 160 libraries and it isn’t plausible to recompile so many libraries at this time.

Choosing a terminal emulator that has a simpler design might help in this regard. Emulators that call libraries for 3D effects etc and have native support for displaying in-line graphics have a much greater attack surface.

A terminal emulator could be run in a container to prevent it from doing any damage if it is compromised. But the terminal emulator will have full control over the shell it runs and if the shell has access needed to allow commands like scp/rsync to do what is expected of them then that means that no useful level of containment is possible.

It would be possible to run a terminal emulator in a container for the purpose of connecting to an insecure or hostile system and not allow scp/rsync to/from any directory other than /tmp (or other directories to use for sharing files). You could run “exec ssh $SERVER” so the terminal emulator session ends when the ssh connection ends.

There aren’t good solutions to the problems of terminal emulation security. But testing every terminal emulator with vt-houdini and fuzzing the popular ones would be a good start.

Qubes level isolation will help things in some situations, but if you need to connect to a server with privileged access to read log files containing potentially hostile data (which is a common sysadmin use case) then there aren’t good options.
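For the specific case of reading logs, one partial measure is to make sure control bytes never reach the terminal at all. The sketch below is my own illustration and not something proposed in the article [1]: it rewrites C0, DEL, and C1 control characters as visible `\xNN` text, in the same spirit as `cat -v`. The name `make_visible` is made up for this example. It does nothing for interactive sessions on a hostile system, where the remote end writes to the terminal directly.

```python
import sys


def make_visible(text: str) -> str:
    """Replace control characters with printable \\xNN text.

    Newline and tab are kept. Everything else below 0x20, DEL (0x7f), and the
    C1 range (0x80 to 0x9f) is shown literally so the terminal never sees ESC
    or CSI bytes coming from the untrusted data.
    """
    out = []
    for ch in text:
        code = ord(ch)
        if ch in "\n\t":
            out.append(ch)
        elif code < 0x20 or 0x7F <= code <= 0x9F:
            out.append(f"\\x{code:02x}")
        else:
            out.append(ch)
    return "".join(out)


if __name__ == "__main__":
    # Filter stdin to stdout; errors="replace" keeps undecodable bytes from
    # aborting the run while still not passing them through raw.
    for raw in sys.stdin.buffer:
        sys.stdout.write(make_visible(raw.decode("utf-8", errors="replace")))
```

Saved under a hypothetical name such as `visible.py`, it would be used as a filter, for example `python3 visible.py < /var/log/auth.log | less`.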

About 6 months ago I got a Nissan LEAF ZE1 (2019 model) [1]. Generally it’s going well and I’m happy with most things about it.

One issue is that as there isn’t a lot of weight in the front with the batteries in the centre of the car the front wheels slip easily when accelerating. It’s a minor thing but a good reason for wanting AWD in an electric car.

When I got the car I got two charging devices, the one to charge from a regular 240V 10A power point (often referred to as a “granny charger”) and a cable with a special EV charging connector on each end. The cable with an EV connector on each end is designed for charging that’s faster than the “granny charger” but not as fast as the rapid chargers which have the cable connected to the supply so the cable temperature can be monitored and/or controlled. That cable can be used if you get a fast charger setup at your home (which I never plan to do) and apparently at some small hotels and other places with home-style EV charging. I’m considering just selling that cable on ebay as I don’t think I have any need to personally own a cable other than the “granny charger”.

The key fob for the LEAF has a battery installed, it’s either CR2032 or CR2025 – mine has CR2025. Some reports on the Internet suggest that you can stuff a CR2032 battery in anyway but that didn’t work for me as the thickness of the battery stopped some of the contacts from making a good connection. I think I could have got it going by putting some metal in between but the batteries aren’t expensive enough to make it worth the effort and risk. It would be nice if I could use batteries from my stockpile of CR2032 batteries that came from old PCs but I can afford to spend a few dollars on it.

My driveway is short and if I left the charger out it would be visible from the street and at risk of being stolen. I’m thinking of chaining the charger to a tree and having some sort of waterproof enclosure for it so I don’t have to go to the effort of taking it out of the boot every time I use it. Then I could also configure the car to only charge during the peak sunlight hours when the solar power my home feeds into the grid has a negative price (we have so much solar power that it’s causing grid problems).

The cruise control is a pain to use, so much so that I haven’t yet got it to work usefully. The features look good in the documentation but in practice it’s not as good as the Kia one I’ve used previously where I could just press one button to turn it on, another button to set the current speed as the cruise control speed, and then just have it work.

The electronic compass built in to the dash turned out to be surprisingly useful. I regret not gluing a compass to the dash of previous cars. One example is when I start google navigation for a journey and it says “go South on street X” and I need to know which direction is South so I don’t start in the wrong direction. Another example is when I know that I’m North of a major road that I need to take to get to my destination so I just need to go roughly South and that is enough to get me to a road I recognise.

In the past when there was a bird in the way I didn’t do anything different, I kept driving at the same speed and relied on the bird to see me and move out of the way. Birds have faster reactions than humans and have evolved to move at the speeds cars travel on all roads other than freeways, also birds that are on roads are usually ones that have an eye in each side of their head so they can’t not see my car approaching. For decades this worked, but recently a bird just stood on the road and got squashed. So I guess that I should honk when there are birds on the road.

Generally everything about the car is fine and I’m happy to keep driving it.

One of the problems I encountered with the PinePhone Pro (PPP) when I tried using it as a daily driver [1] was the charge speed, both slow charging and a bad ratio of charge speed to discharge speed. I also tried using a One Plus 6 (OP6) which had a better charge speed and battery life but I never got VoLTE to work [2] and VoLTE is a requirement for use in Australia and an increasing number of other countries. In my tests with the Librem 5 from Purism I had similar issues with charge speed [3].

What I want to do is get an acceptable ratio of charge time to use time for a free software phone. I don’t necessarily object to a phone that can’t last an 8 hour day on a charge, but I can’t use a phone that needs to be on charge for 4 hours during the day. For this part I’m testing the charge speed and will test the discharge speed when I have solved some issues with excessive CPU use.

I tested with a cheap USB power monitoring device that is inline between the power cable and the phone. The device has no method of export so I just watched it and when the numbers fluctuated I tried to estimate the average. I only give the results to two significant digits which is about all the accuracy that is available, as I copied the numbers separately the V*A might not exactly equal the W. I idly considered rounding off Voltages to the nearest Volt and current to the half amp but the way the PC USB ports have voltage drop at higher currents is interesting.
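Since the volts, amps, and watts were copied down separately, a quick way to see how far a reading is from V*A is to parse it and compare. This is a sketch only; the cell format (“4.6V 2.0A 9.2W”) is the one used for the readings in this post and the function names are hypothetical.

```python
import re
from typing import Optional, Tuple

# Matches cells such as "4.6V 2.0A 9.2W"; anything else (e.g. "FAIL") is rejected.
READING = re.compile(r"([\d.]+)V ([\d.]+)A ([\d.]+)W")


def parse_reading(cell: str) -> Optional[Tuple[float, float, float]]:
    """Return (volts, amps, watts) or None if the cell is not a reading."""
    match = READING.fullmatch(cell.strip())
    if match is None:
        return None
    volts, amps, watts = (float(group) for group in match.groups())
    return volts, amps, watts


def watt_discrepancy(cell: str) -> Optional[float]:
    """Recorded watts minus volts times amps, or None for non-readings."""
    reading = parse_reading(cell)
    if reading is None:
        return None
    volts, amps, watts = reading
    return watts - volts * amps


if __name__ == "__main__":
    for cell in ("4.6V 2.0A 9.2W", "4.7V 1.2A 6.0W", "FAIL"):
        print(cell, watt_discrepancy(cell))
```

A difference of a few tenths of a watt is what you would expect from estimating the average of three fluctuating numbers by eye.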

This post should be useful for people who want to try out FOSS phones but don’t want to buy the range of phones and chargers that I have bought.

I have seen claims about improvements with charging speed on the Librem 5 with recent updates so I decided to compare a number of phones running Debian/Trixie as well as some Android phones. I’m comparing an old Samsung phone (which I tried running Droidian on but is now on Android) and a couple of Pixel phones with the three phones that I currently have running Debian for charging.

The Librem 5 had problems with charging on a port on the HP ML110 Gen9 I was using as a workstation. I have sold the ML110 and can’t repeat that exact test but I tested on the HP z640 that I use now. The z640 is a much better workstation (quieter and better support for audio and other desktop features) and is also sold as a workstation.

The z640 documentation says that of the front USB ports the top one can do “fast charge (up to 1.5A)” with “USB Battery Charging Specification 1.2”. The only phone that would draw 1.5A on that port was the Librem 5 but the computer would only supply 4.4V at that current which is poor. For every phone I tested the bottom port on the front (which apparently doesn’t have USB-BC or USB-PD) charged at least as fast as the top port and every phone other than the OP6 charged faster on the bottom port. The Librem 5 also had the fastest charge rate on the bottom port. So the rumours about the Librem 5 being updated to address the charge speed on PC ports seem to be correct.

The Wikipedia page about USB Hardware says that the only way to get more than 1.5A from a USB port while operating within specifications is via USB-PD. As they are USB 3.0 ports, the bottom 3 ports should be limited to 5V at 0.9A for 4.5W. The Librem 5 takes 2.0A and the voltage drops to 4.6V so that gives 9.2W. This shows that the z640 doesn’t correctly limit power output and the Librem 5 will also take considerably more power than the specs allow. It would be really interesting to get a powerful PSU and see how much power a Librem 5 will take without negotiating USB-PD and it would also be interesting to see what happens when you short circuit a USB port in a HP z640. But I recommend not doing such tests on hardware you plan to keep using!
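The comparison against the spec limits is simple arithmetic, sketched below in Python. The limits are the ones mentioned in this post (0.9A for a USB 3.0 port, 1.5A for a Battery Charging 1.2 port); the dictionary keys and function names are just labels chosen for this example.

```python
NOMINAL_VOLTS = 5.0

# Current limits in amps, as quoted in the text above.
LIMIT_AMPS = {
    "usb3": 0.9,    # plain USB 3.0 port
    "bc1.2": 1.5,   # USB Battery Charging 1.2 "fast charge" port
}


def spec_watts(port: str) -> float:
    """Maximum power the port should supply at the nominal 5V."""
    return NOMINAL_VOLTS * LIMIT_AMPS[port]


def exceeds_spec(port: str, measured_amps: float) -> bool:
    """True if the measured current is above the limit for that port type."""
    return measured_amps > LIMIT_AMPS[port]


# Librem 5 on a bottom (USB 3.0) port drew 2.0A; the OP6 drew 0.50A.
print(spec_watts("usb3"))
print(exceeds_spec("usb3", 2.0))
print(exceeds_spec("usb3", 0.50))
```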

Of the phones I tested the only one that was within specifications on the bottom port of the z640 was the OP6. I think that is more about it just charging slowly in every test than conforming to specs.

The next test target is my 5120*2160 Kogan monitor with a USB-C port [4]. This worked quite well and apart from being a few percent slower on the PPP it outperformed the PC ports for every device due to using USB-PD (the only way to get more than 5V) and due to just having a more powerful PSU that doesn’t have a voltage drop when more than 1A is drawn.

The Ali Charger is a 240W GaN charger that I bought from AliExpress, supporting multiple USB-PD devices. I tested with the top USB-C port that can supply 100W to laptops.

The Librem 5 has charging going off repeatedly on the Ali charger and doesn’t charge properly. It’s also the only charger for which the Librem 5 requests a higher voltage than 5V, so it seems that the Librem 5 has some issues with USB-PD. It would be interesting to know why this problem happens, but I expect that a USB signal debugger is needed to find that out. On AliExpress USB 2.0 sniffers go for about $50 each and with a quick search I couldn’t see a USB 3.x or USB-C sniffer. So I’m not going to spend my own money on a sniffer, but if anyone in Melbourne Australia owns a sniffer and wants to visit me and try it out then let me know. I’ll also bring it to Everything Open 2026.

Generally the Ali charger was about the best charger from my collection apart from the case of the Librem 5.

I got a number of free Dell WD15 (aka K17A) USB-C powered docks as they are obsolete. They have VGA ports among other connections and for the HDMI and DisplayPort ports it doesn’t support resolutions higher than FullHD if both ports are in use or 4K if a single port is in use. The resolutions aren’t directly relevant to the charging but it does indicate the age of the design.

The Dell dock seems to not support any voltages other than 5V for phones and 19V (20V requested) for laptops. Certainly not the 9V requested by the Pixel 7 Pro and Pixel 8 phones. I wonder if not supporting most fast charging speeds for phones was part of the reason why other people didn’t want those docks and I got some for free. I hope that the newer Dell docks support 9V. A phone running Samsung Dex will display 4K output on a Dell dock and can productively use a keyboard and mouse. Getting equivalent functionality to Dex working properly on Debian phones is something I’m interested in.

The “Battery” I tested with is a Chinese battery for charging phones and laptops, it’s allegedly capable of 67W USB-PD supply but so far all I’ve seen it supply is 20V 2.5A for my laptop. I bought the 67W battery just in case I need it for other laptops in future, the Thinkpad X1 Carbon I’m using now will charge from a 30W battery.

There seems to be an overall trend of the most shonky devices giving the best charging speeds. Dell and HP make quality gear although my tests show that some HP ports exceed specs. Kogan doesn’t make monitors, they just put their brand on something cheap. Buying one of the cheapest chargers from AliExpress and one of the cheaper batteries from China I don’t expect the highest quality and I am slightly relieved to have done enough tests with both of those that a fire now seems extremely unlikely. But it seems that the battery is one of the fastest charging devices I own and with the exception of the Librem 5 (which charges slowly on all ports and unreliably on several ports) the Ali charger is also one of the fastest ones. The Kogan monitor isn’t far behind.

The Samsung Galaxy Note 9 was released in 2018 as was the OP6. The PPP was first released in 2022 and the Librem 5 was first released in 2020, but I think they are both at a similar technology level to the Note 9 and OP6 as the companies that specialise in phones have a pipeline for bringing new features to market.

The Pixel phones are newer and support USB-PD voltage selection while the other phones either don’t support USB-PD or support it but only want 5V. The exception is the Librem 5, which wants a higher voltage but runs it at a low current and repeatedly disconnects.

One of the major problems I had in the past which prevented me from using a Debian phone as my daily driver is the ratio of idle power use to charging power. Now that the phones seem to charge faster, a Debian phone will be usable if I can get the idle power use under control.

Currently the Librem 5 running Trixie is using 6% CPU time (24% of a core) while idle and the screen is off (but “Caffeine” mode is enabled so no deep sleep). On the PPP the CPU use varies from about 2% to 20% (12% to 120% of one core), which was mainly plasmashell and kwin_wayland. The OP6 has idle CPU use a bit under 1% CPU time which means a bit under 8% of one core.
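The conversion between "percent of total CPU time" and "percent of one core" is just a multiplication by the number of cores. Here is a small Python sketch; the core counts (4, 6 and 8) are inferred from the ratios quoted above and the function name is made up for this example.

```python
def per_core_percent(total_percent: float, cores: int) -> float:
    """Convert a share of total CPU time into a share of a single core."""
    return total_percent * cores


# Core counts are assumptions inferred from the figures in the text.
CORES = {"Librem 5": 4, "PPP": 6, "OP6": 8}

print(per_core_percent(6, CORES["Librem 5"]))   # Librem 5 idle figure
print(per_core_percent(2, CORES["PPP"]))        # PPP low end
print(per_core_percent(20, CORES["PPP"]))       # PPP high end
print(per_core_percent(1, CORES["OP6"]))        # OP6 upper bound
```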

The Librem 5 and PPP seem to have configuration issues with KDE Mobile and Pipewire that result in needless CPU use. With those issues addressed I might be able to make a Librem 5 or PPP a usable phone if I have a battery to charge it.

The OP6 is an interesting point of comparison as a Debian phone but is not a viable option as a daily driver due to problems with VoLTE and also some instability – it sometimes crashes or drops off Wifi.

The Librem 5 charges at 9.2W from a PC that doesn’t obey specs and 10W from a battery. That’s a reasonable charge rate and the fact that it can request 12V (unsuccessfully) opens the possibility of higher charge rates in future. That could allow a reasonable ratio of charge time to use time.

The PPP has lower charging speeds than the Librem 5 but works more consistently as there was no charger I found that wouldn’t work well with it. This is useful for the common case of charging from a random device in the office. But the fact that the Librem 5 takes 10W from the battery while the PPP only takes 6.3W would be an issue if using the phone while charging.

Now I know the charge rates for different scenarios I can work on getting the phones to use significantly less power than that on average.
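To make the charge time to use time ratio concrete, here is a rough Python sketch of the energy balance: the energy put in while charging has to equal the energy drawn while in use. It ignores charging losses and any power the phone uses while on charge, and the 2W average draw is a purely hypothetical number since the discharge tests have not been done yet. Only the 10W charge rate comes from the measurements.

```python
def charge_hours_needed(use_hours: float, avg_use_watts: float,
                        charge_watts: float) -> float:
    """Hours on charge needed to replace the energy used off charge.

    Simplified model: use_hours * avg_use_watts == charge_hours * charge_watts.
    """
    if charge_watts <= 0:
        raise ValueError("charge_watts must be positive")
    return use_hours * avg_use_watts / charge_watts


# Hypothetical: an 8 hour day at an assumed 2W average draw,
# recharged at the 10W the Librem 5 takes from the battery.
print(charge_hours_needed(8, 2.0, 10.0))
```

The point of the sketch is that halving the average draw halves the time needed on charge, which is why the idle CPU use matters as much as the charger.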

The 67W battery or something equivalent is something I think I will always need to have around when using a PPP or Librem 5 as a daily driver.

The ability to charge fast while at a desk is also an important criterion. The charge speed of my home PC is good in that regard and the charge speed of my monitor is even better. Getting something equivalent at a desk in an office I work in is a possibility.

Improving the Debian distribution for phones is necessary. That’s something I plan to work on although the code is complex and in many cases I’ll have to just file upstream bug reports.

I have also ordered a FuriLabs FLX1s [5] which I believe will be better in some ways. I will blog about it when it arrives.

| Phone | Top z640 | Bottom z640 | Monitor | Ali Charger | Dell Dock | Battery | Best | Worst |
|---|---|---|---|---|---|---|---|---|
| Note9 | 4.8V 1.0A 5.2W | 4.8V 1.6A 7.5W | 4.9V 2.0A 9.5W | 5.1V 1.9A 9.7W | 4.8V 2.1A 10W | 5.1V 2.1A 10W | 5.1V 2.1A 10W | 4.8V 1.0A 5.2W |
| Pixel 7 pro | 4.9V 0.80A 4.2W | 4.8V 1.2A 5.9W | 9.1V 1.3A 12W | 9.1V 1.2A 11W | 4.9V 1.8A 8.7W | 9.0V 1.3A 12W | 9.1V 1.3A 12W | 4.9V 0.80A 4.2W |
| Pixel 8 | 4.7V 1.2A 5.4W | 4.7V 1.5A 7.2W | 8.9V 2.1A 19W | 9.1V 2.7A 24W | 4.8V 2.3A 11.0W | 9.1V 2.6A 24W | 9.1V 2.7A 24W | 4.7V 1.2A 5.4W |
| PPP | 4.7V 1.2A 6.0W | 4.8V 1.3A 6.8W | 4.9V 1.4A 6.6W | 5.0V 1.2A 5.8W | 4.9V 1.4A 5.9W | 5.1V 1.2A 6.3W | 4.8V 1.3A 6.8W | 5.0V 1.2A 5.8W |
| Librem 5 | 4.4V 1.5A 6.7W | 4.6V 2.0A 9.2W | 4.8V 2.4A 11.2W | 12V 0.48A 5.8W | 5.0V 0.56A 2.7W | 5.1V 2.0A 10W | 4.8V 2.4A 11.2W | 5.0V 0.56A 2.7W |
| OnePlus6 | 5.0V 0.51A 2.5W | 5.0V 0.50A 2.5W | 5.0V 0.81A 4.0W | 5.0V 0.75A 3.7W | 5.0V 0.77A 3.7W | 5.0V 0.77A 3.9W | 5.0V 0.81A 4.0W | 5.0V 0.50A 2.5W |
| Best | 4.4V 1.5A 6.7W | 4.6V 2.0A 9.2W | 8.9V 2.1A 19W | 9.1V 2.7A 24W | 4.8V 2.3A 11.0W | 9.1V 2.6A 24W | | |

I am not entirely sure what makes the AI debate so polarizing, although I suspect it has something to do with feeling threatened by a changing landscape. What I can say with certainty is that I often find it difficult to have a nuanced conversation about AI with people. It seems to me that people fall into two polar opposite camps: those who think AI is completely great and that we should fire all the programmers and creatives; and those who think that AI is all bad and we should go backwards in time to a place before it existed.

Honestly, I think neither end of the spectrum is right. I should admit here that my own stance is significantly more nuanced than it was six months ago, but having now used various code assistant LLMs for a few months, they clearly make useful contributions to my work day. I think the elevator pitch would be something like “AI is a useful tool if treated like very smart autocomplete in tightly constrained environments”. I would even go so far as to say that one of the measures of “AI maturity” of a code base should be how tight the constraints are. Unit tests, functional tests, static analysis, and so forth don’t go away with AI generated code; they are in fact more important than ever.

This is where the inexperienced developers will trip themselves up. If they’re not reviewing the generated code closely and course correcting the machine as required, then they’re likely to end up with a mess that isn’t performant or maintainable. The human still needs to know what “good” looks like. I think version control is key here too, because being able to walk backwards when you went down a dead end remains just as important as ever.

The thing is the current state of the art for code generation AIs is about as good as a junior developer. It requires just as much supervision, coaching, and prompting to think about the right things. However, just as you wouldn’t get a junior developer to mentor another junior developer, I am not sure that everyone has the skills required to adequately supervise the current state of the art in code generation.

I am on vacation, as I am sure many people are at this time of year. This means the usual things for me: cleaning up the home office, this time in the most annoying manner I can think of; playing with personal projects I haven’t had time for during the year; and just… resting. Part of resting for me is reading, which is how I happened upon this excellent blog post about how the expectations placed upon Silicon Valley engineering managers have changed over the last couple of decades.

The post resonates strongly with me — I think the idea that the expectations placed upon managers have changed in noticeable eras is true, but it also explains my own mixed feelings about the Silicon Valley of today. You see, as the industry became less passionate about treating engineers well and building things which genuinely improved the world over the last couple of years, I became less passionate about being treated poorly by my employers. It is definitely true that an employer is within their rights to let you know that you’re a replaceable asset of convenience, which I think is definitely a thing Cisco reinforced as often as possible, but the inverse is also true. If this employment thing is a purely commercial relationship, then it is simply rational for me to take a better offer if it comes along without feeling any guilt.

That is, I wonder if the industry will enjoy reaping what they are currently sowing when those better opportunities do inevitably come along?

As I bid adieu to 2025, annus horribilis, I wish to welcome 2026, Annus Mirabilis.

What changed from Hello 2025?

Fell in love with Pumpkin, Vanessa’s dog. I only did 41 days of travel, 7 trips, 13 cities and 36,049 miles. This was basically covid 2020 ;)

Ushered it in Kuala Lumpur 2025 and 2026.

I look forward to having a better year ahead. Best year as 42 is coming, and that is the answer to life, the universe and everything.

Know who my friends are. Know who were fair weather friends. Very enlightening 2025 has been. You’ll eat, just not at my table.

Ever onwards. And upwards.

I’ve had a Creality CFS upgrade for my K1 Max sitting on my workbench for probably a month waiting for me to install it. Part of that delay is that I knew it would take a while to install, and I am glad I waited.